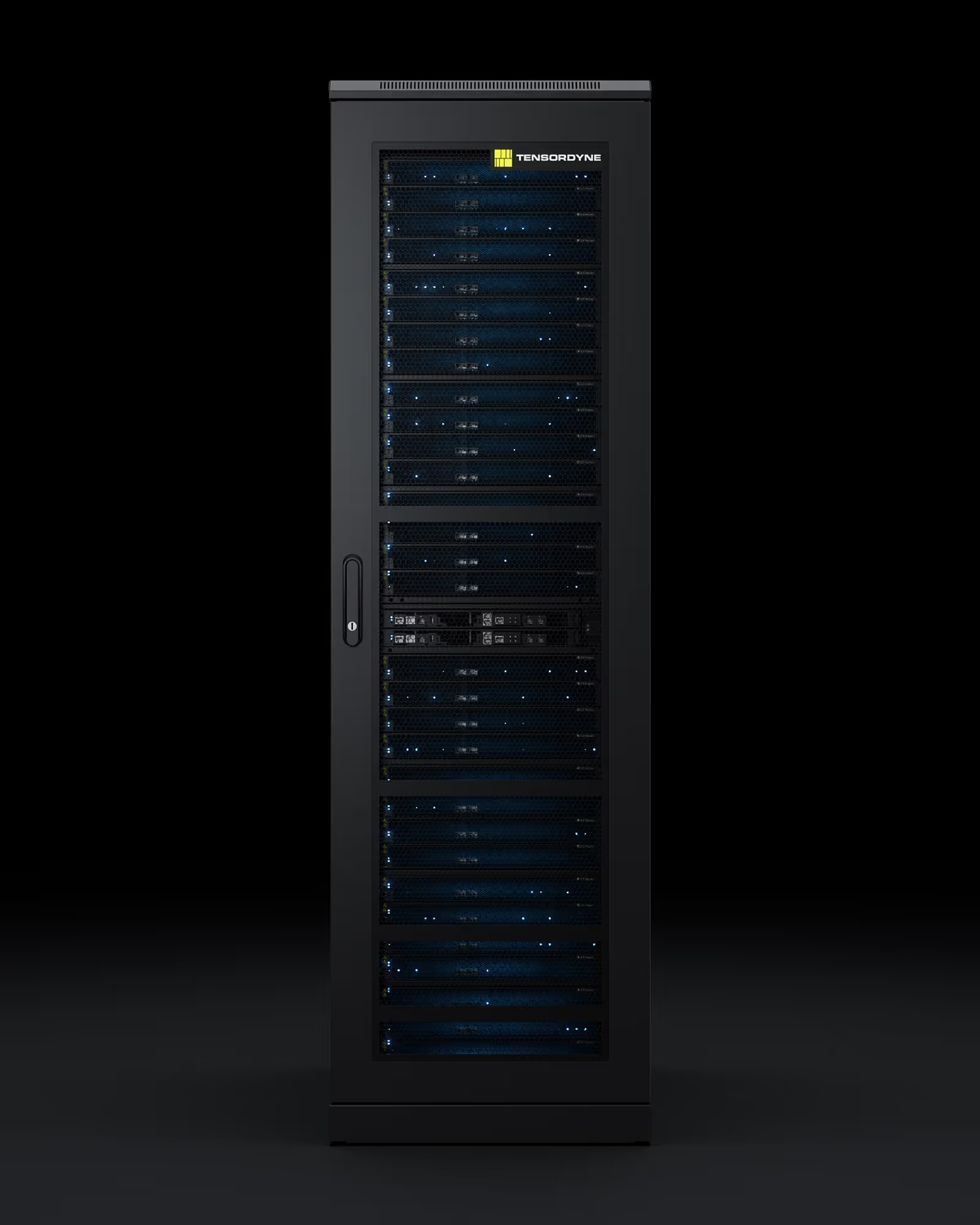

Tensordyne Inference System

The Tensordyne inference system is the first AI inference platform built on our proprietary logarithmic math number system, delivering super-node capacity at a fraction of the energy, space, and cost. The approach has already been proven in silicon, establishing the foundation for our end-to-end design, from math to chip to interconnect. Tensordyne inference is a drop-in fit for existing datacenters, is air-cooled, and scales without straining the power grid, enabling faster, more affordable, and more sustainable generative AI.
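The general idea behind a logarithmic number system can be sketched in a few lines of Python. The sketch assumes a generic toy format (a sign bit plus a fixed-point log2 magnitude with a hypothetical `FRAC_BITS` precision); it is not Tensordyne's proprietary format, and `to_lns`, `from_lns`, and `lns_mul` are illustrative names only. It shows why the multiply in a multiply-accumulate becomes a cheap integer addition in the log domain.

```python
import math

# Toy logarithmic number system (LNS) for illustration only. This is an
# assumed generic format, NOT Tensordyne's proprietary number system.
# A nonzero value x is stored as (sign bit, round(log2|x| * 2**FRAC_BITS)).

FRAC_BITS = 8  # hypothetical number of fractional bits in the log2 magnitude


def to_lns(x: float) -> tuple[int, int]:
    """Encode a nonzero float as (sign bit, fixed-point log2 magnitude)."""
    if x == 0.0:
        # A real LNS reserves a special code for zero; this toy omits it.
        raise ValueError("zero is not representable in this toy LNS")
    sign = 1 if x < 0 else 0
    return sign, round(math.log2(abs(x)) * (1 << FRAC_BITS))


def from_lns(v: tuple[int, int]) -> float:
    """Decode (sign bit, fixed-point log2 magnitude) back to a float."""
    sign, log_fixed = v
    magnitude = 2.0 ** (log_fixed / (1 << FRAC_BITS))
    return -magnitude if sign else magnitude


def lns_mul(a: tuple[int, int], b: tuple[int, int]) -> tuple[int, int]:
    """Multiply in the log domain: XOR the sign bits, add the log magnitudes."""
    return a[0] ^ b[0], a[1] + b[1]


product = lns_mul(to_lns(3.0), to_lns(-2.5))
print(from_lns(product))  # approximately -7.5; log rounding adds a small error
```

Addition is the hard part of any LNS, because log2(a + b) has no simple closed form in terms of log2(a) and log2(b); practical designs typically rely on lookup tables or approximations, which this toy leaves out.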

3,000,000 Tokens per Second*

1/3 the Capex and 1/8 the Opex of Leading Solutions

*Llama 3.3 70B

Tensordyne inference combines industry-leading compute density, ultra-fast high-bandwidth memory, and a lightning-fast interconnect fabric to outpace competing solutions. Exceptional energy efficiency lowers your operating costs, while superior die yields and a compact architecture slash capital expenses, delivering more tokens per dollar per watt than anything else on the market.

Performance

Key Stats

Highest per-Rack Throughput

DeepSeek R1
>1.7M tokens/sec per rack
>10K concurrent users
As low as $0.05 / 1M tokens

Llama 3.3 70B
>3M tokens/sec per rack
>10K concurrent users
As low as $0.02 / 1M tokens

First 4K30 AI Video Generation in Real Time

Magi-1
30 FPS in UHD with <1 s generation time
Less than $0.30 per 10-second clip
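The price points above are quoted in different units (per million tokens, per 10-second clip). A short Python sketch can convert them into workload-level figures; it uses only the numbers on this page, and the constant and function names are illustrative, not part of any Tensordyne API.

```python
# Unit-conversion sketch using only the prices quoted above. They are
# "as low as" / "less than" figures, so the results are best-case bounds.
# The dictionary keys and function names are illustrative, not an API.

PRICE_PER_MILLION_TOKENS = {"DeepSeek R1": 0.05, "Llama 3.3 70B": 0.02}  # USD
MAGI1_PRICE_PER_CLIP = 0.30  # USD per 10-second clip (upper bound)
CLIP_SECONDS = 10
FPS = 30


def token_cost(model: str, tokens: int) -> float:
    """Best-case USD cost of generating `tokens` tokens with `model`."""
    return PRICE_PER_MILLION_TOKENS[model] * tokens / 1_000_000


def cost_per_frame() -> float:
    """Upper bound on Magi-1 cost per UHD frame: clip price / (seconds * fps)."""
    return MAGI1_PRICE_PER_CLIP / (CLIP_SECONDS * FPS)


print(f"1B Llama 3.3 70B tokens: ${token_cost('Llama 3.3 70B', 1_000_000_000):.2f}")
print(f"1B DeepSeek R1 tokens:   ${token_cost('DeepSeek R1', 1_000_000_000):.2f}")
print(f"Magi-1 per frame:        ${cost_per_frame():.4f}")
```

At those list prices, one billion tokens costs as little as $20 on Llama 3.3 70B or $50 on DeepSeek R1, and a 300-frame, 10-second Magi-1 clip works out to under $0.001 per frame.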

Software

Push-Button Compilation

Browse & Get Started

Choose from a wide variety of ready-to-run LLM, diffusion, vision, and audio models. Compare their throughput, latency, and cost per token on the Tensordyne inference system, then deploy directly with a few commands.

Customize & Compile

Use the SDK to swap kernels, change the quantization strategy, or add custom pre- and post-processing, then compile with our graph compiler. The bit-exact Tensordyne logarithmic math emulator reports predicted accuracy even when no Tensordyne inference system is available.
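To give a feel for how software emulation can predict accuracy without hardware, here is a rough Python sketch under loud assumptions: a hypothetical log2 grid with `frac_bits` fractional bits stands in for the real number format, and a hypothetical `normalized_error` metric stands in for model accuracy. It is not the Tensordyne emulator, whose interface is not described here; it only illustrates the general pattern of running the same computation in a quantized format and a reference format and comparing the results.

```python
import math
import random

# Rough sketch of software emulation of a logarithmic format. The format,
# function names, and error metric are assumptions for illustration; this is
# not the Tensordyne emulator or its API.


def quantize_log(x: float, frac_bits: int) -> float:
    """Snap |x| to the nearest point of a log2 grid with `frac_bits` fractional bits."""
    if x == 0.0:
        return 0.0
    scale = 1 << frac_bits
    snapped = round(math.log2(abs(x)) * scale) / scale
    return math.copysign(2.0 ** snapped, x)


def emulated_dot(w: list[float], x: list[float], frac_bits: int) -> float:
    """Dot product with log-quantized operands; accumulation stays in float64 here."""
    return sum(quantize_log(a, frac_bits) * quantize_log(b, frac_bits) for a, b in zip(w, x))


def normalized_error(w: list[float], x: list[float], frac_bits: int) -> float:
    """|emulated - reference| divided by the sum of |products| (a bounded metric)."""
    reference = sum(a * b for a, b in zip(w, x))
    magnitude = sum(abs(a * b) for a, b in zip(w, x))
    return abs(emulated_dot(w, x, frac_bits) - reference) / magnitude


rng = random.Random(0)
w = [rng.gauss(0.0, 1.0) for _ in range(1024)]
x = [rng.gauss(0.0, 1.0) for _ in range(1024)]
for bits in (2, 4, 8):
    print(f"{bits} fractional log bits: normalized error {normalized_error(w, x, bits):.2e}")
```

A bit-exact emulator would also have to reproduce the hardware's accumulation and rounding rules exactly; this sketch keeps accumulation in float64 for simplicity, so it only approximates that behavior.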

Deploy & Monitor

Deploy your compiled model on Tensordyne hardware as a Kubernetes-native service. The Tensordyne inference system provides industry-standard observability interfaces, with metrics for power, thermals, request latency, and token throughput.

Quality

16-bit Precision at the Power of 4-bit

Efficiency shouldn't come at the cost of accuracy. That's why Tensordyne fundamentally reinvented AI compute, starting from the number system itself.

[Figure tabs: Language Models, Image Models, Video Models]

SDK

Designed for Boundless Scale

Tensordyne's inference system brings together Tensordyne logarithmic math compute and terabytes of HBM3e, linked by our ultra-high-bandwidth, any-to-any interconnect. Scale seamlessly from a single chip to hundreds within one instance, powering multi-trillion-parameter models at full throttle or delivering real-time 4K video from a single rack.