The Zeroth Layer

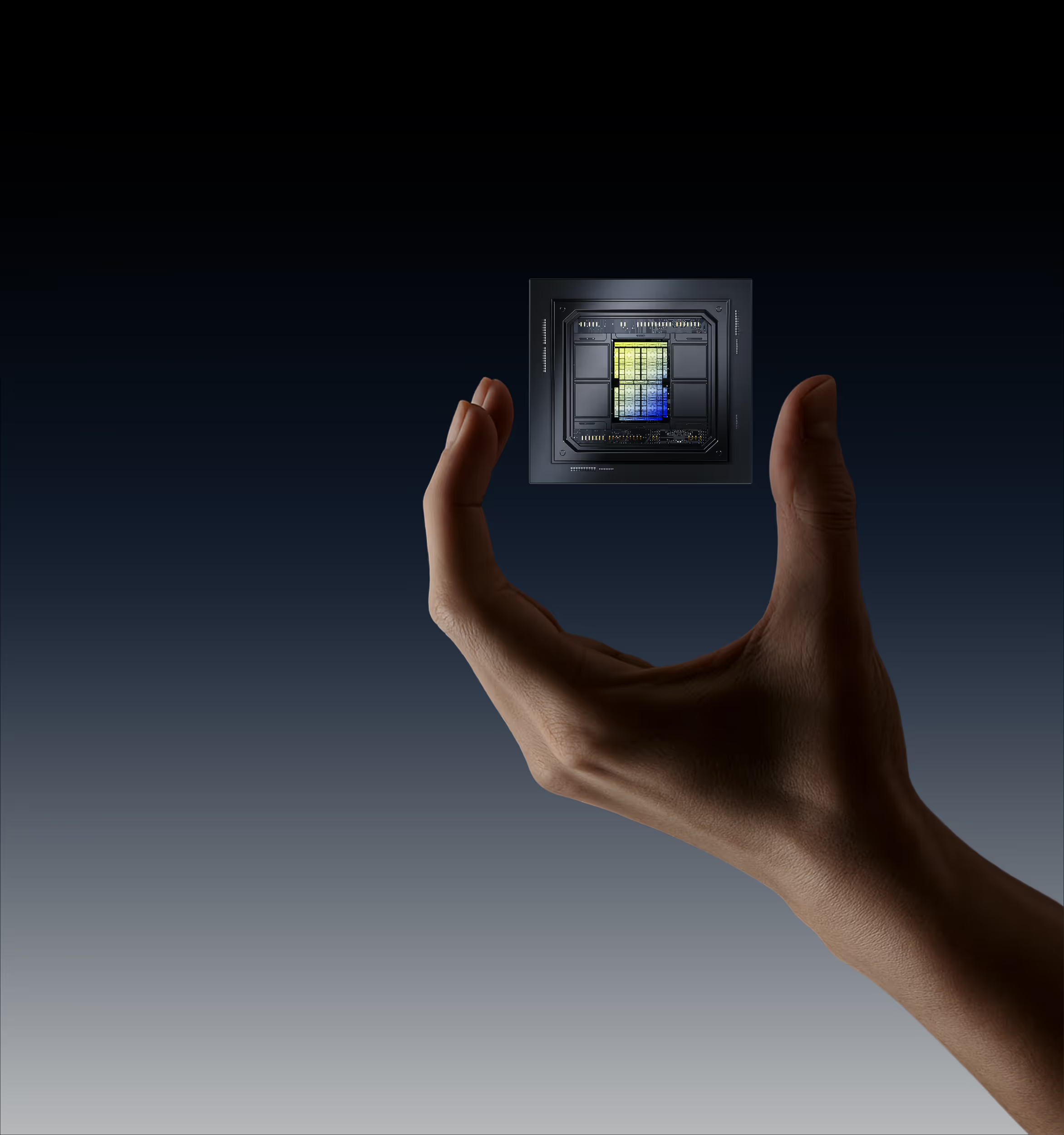

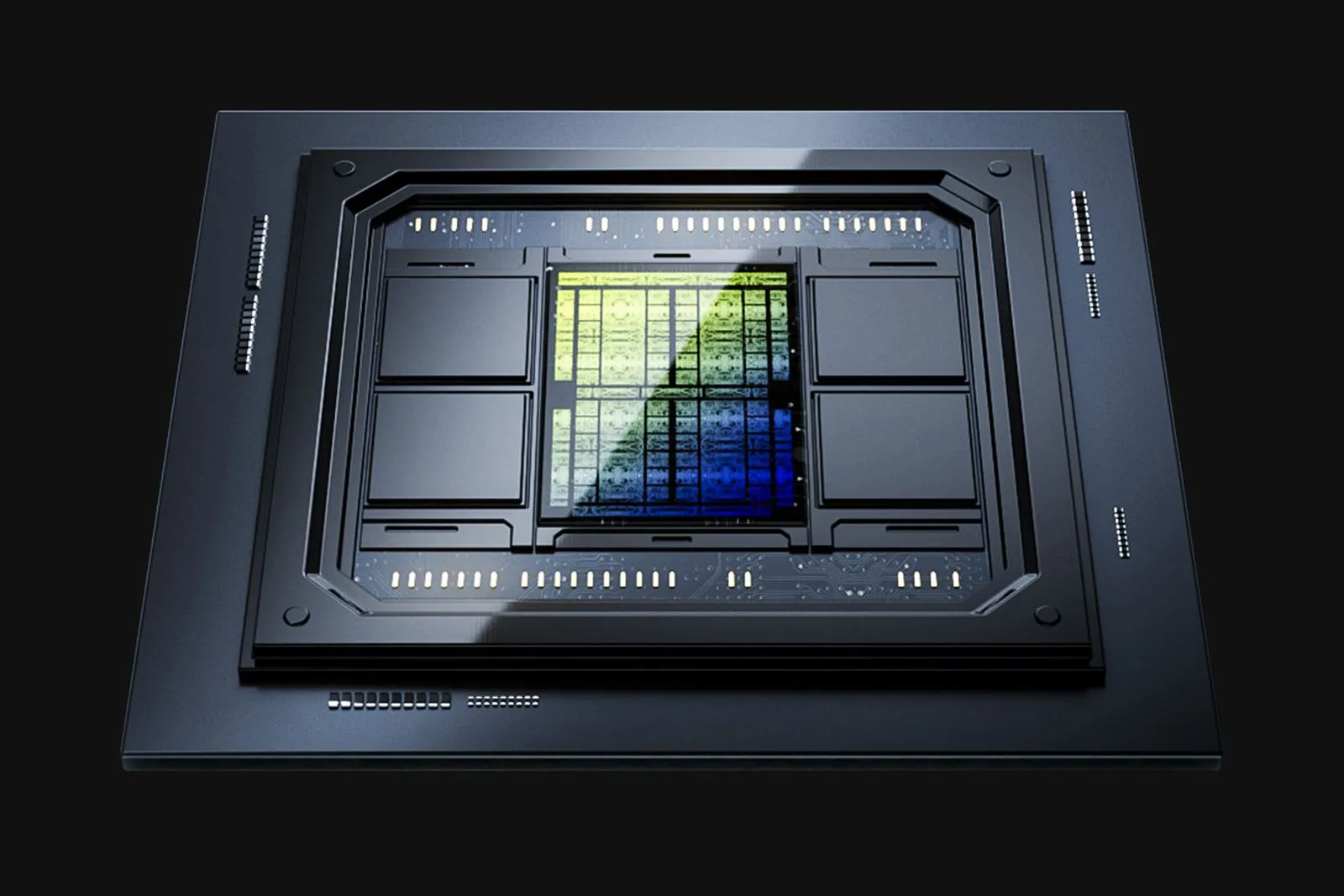

Purpose-built logarithmic math, hard-wired in 3 nm silicon, ready for the largest models and real-time 4K video.

Log Math

Cut Chip Compute Area with Native Log Math

By replacing every multiply with lightweight log-math adders, we free up chip compute area compared with today’s FP8/INT8 GPUs. Fewer transistors mean chips that run cooler and use less energy.

FP16 Multiply

Tensordyne Log Math 16-bit Multiply
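To see why a multiply can become an add, recall that log2(a × b) = log2(a) + log2(b). The sketch below illustrates that general identity with a toy logarithmic number format. It is not Tensordyne's actual encoding: the sign-bit layout, the `FRAC_BITS` precision, and the function names are all assumptions made for illustration.

```python
import math
from typing import Tuple

# Hypothetical precision: fractional bits kept in the fixed-point log value.
FRAC_BITS = 10

LogValue = Tuple[int, int]  # (sign bit, fixed-point log2 of the magnitude)


def to_log(x: float) -> LogValue:
    """Encode a nonzero real number as a sign bit plus a fixed-point log2."""
    if x == 0:
        raise ValueError("zero needs a dedicated encoding in a log format")
    sign_bit = 1 if x < 0 else 0
    log_mag = round(math.log2(abs(x)) * (1 << FRAC_BITS))
    return sign_bit, log_mag


def from_log(value: LogValue) -> float:
    """Decode back to a float (only needed when leaving the log domain)."""
    sign_bit, log_mag = value
    magnitude = 2.0 ** (log_mag / (1 << FRAC_BITS))
    return -magnitude if sign_bit else magnitude


def log_multiply(a: LogValue, b: LogValue) -> LogValue:
    """Multiply two log-domain values: XOR the signs, add the logs."""
    return a[0] ^ b[0], a[1] + b[1]


product = from_log(log_multiply(to_log(3.0), to_log(-4.0)))
print(product)  # close to -12.0, up to the rounding of the log encoding
```

The only arithmetic inside `log_multiply` is one integer addition and one XOR, which is the intuition behind trading multiplier circuits for adders. Addition of two log-domain values is the harder operation in such formats and is not shown here.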

Silicon

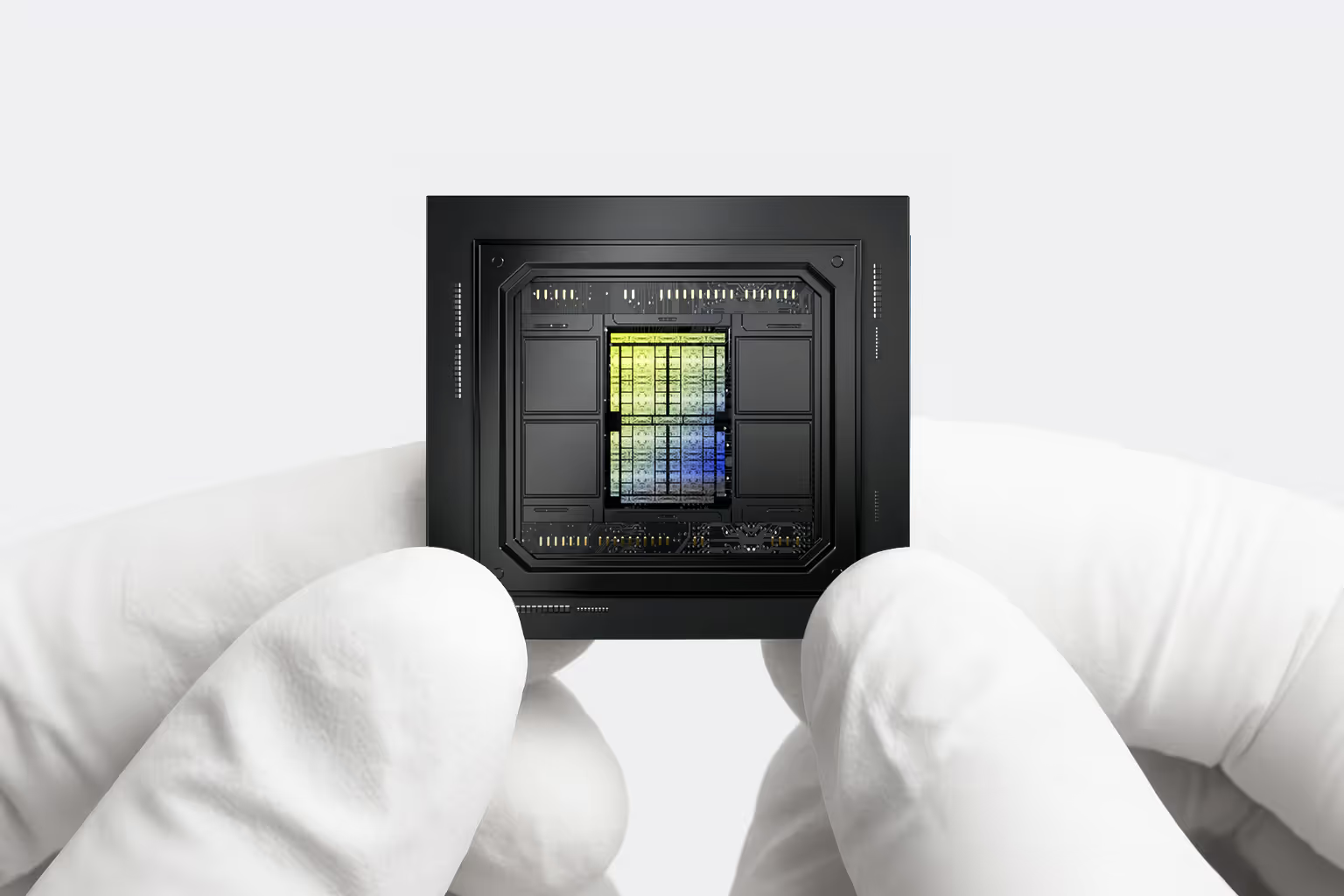

Silicon for Beast-Mode Performance and Economics

Fewer transistors free up chip real estate. This lets us pack in extra tensor engines, significantly more high-bandwidth SRAM and HBM3e, plus an any-to-any fabric that feeds data at full throttle so the beast never starves. All of this in a smaller chip means tighter yield nodes, resulting in better hardware economics.

Quality

Uncompromised Accuracy, Full Dynamic Range

Tensordyne Log Math delivers the ideal number system for AI, achieving lower error than floating point while preserving full dynamic range. Accuracy greater than 99.9% relative to any trained language, vision, or video model comes standard. With Tensordyne Log Math, you gain uncompromised precision and efficiency. Say goodbye to the tradeoffs and model degradation that come from using ultra‑low‑bit formats just to meet power budgets.

Language Models

Image Models

Efficiency

Unlocking High-Res Real-Time Video

Higher-resolution real-time video thrives on log math’s high dynamic range, with output that in some cases looks even better than native resolution. Use cases previously out of reach are now technically possible and economically feasible.

Future Outlook

Raising the Roof for AI

Shrinking process nodes and pruning weights help, but they’re incremental. At some point, the industry needs a revolution. Logarithmic compute not only raises the roof for AI, but also reshapes the scaling curve.