
Transformers are more than meets the eye

In 2022, we made a bet that transformers would take over the world.

We’ve spent the past two years building Sohu, the world’s first specialized chip (ASIC) for transformers (the “T” in ChatGPT).

Because we burned the transformer architecture into the chip, Sohu can’t run most traditional AI models: the DLRMs powering Instagram ads, protein-folding models like AlphaFold 2, or older image models like Stable Diffusion 2. It can’t run CNNs, RNNs, or LSTMs either.

But for transformers, Sohu is the fastest chip of all time. It’s not even close.

With over 500,000 tokens per second in Llama 70B throughput, Sohu lets you build products impossible on GPUs.

Sohu is an order of magnitude faster and cheaper than even NVIDIA’s next-generation Blackwell (B200) GPUs.

Today, every state-of-the-art AI model is a transformer: ChatGPT, Sora, Gemini, Stable Diffusion 3, and more. If transformers are replaced by SSMs, RWKV, or any new architecture, our chips will be useless.

But if we’re right, Sohu will change the world. Here’s why we took this bet.

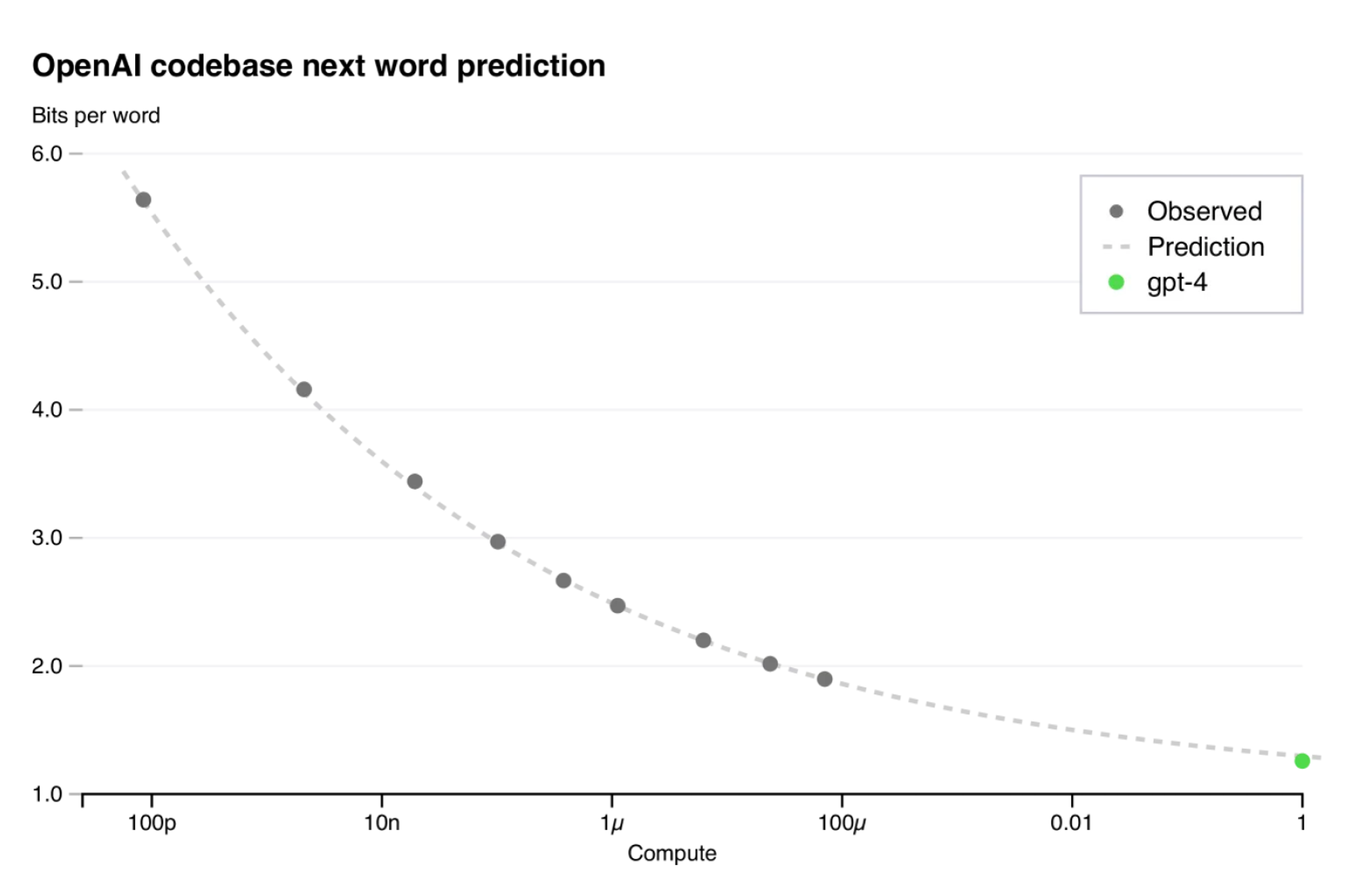

Scale is all you need for superintelligence

In five years, AI models became smarter than humans on most standardized tests.

How? Because Meta used 50,000x more compute to train Llama 400B (2024 SoTA, smarter than most humans) than OpenAI used on GPT-2 (2019 SoTA).

Feed AI models more compute and better data, and they get smarter. Scale is the only trick that’s continued to work for decades, and every large AI company (Google, OpenAI / Microsoft, Anthropic / Amazon, etc.) is spending more than $100 billion over the next few years to keep scaling. We are living through the largest infrastructure buildout of all time.

"I think [we] can scale to the $100B range, … we’re going to get there in a few years" - Dario Amodei, Anthropic CEO

"Scale is really good. When we have built a Dyson Sphere around the sun, we can entertain the discussion that we should stop scaling, but not before then" - Sam Altman, OpenAI CEO

Scaling the next 1,000x will be very expensive. The next-generation data centers will cost more than the GDP of a small nation. At the current pace, our hardware, our power grids, and our pocketbooks can’t keep up.

We’re not worried about running out of data. Whether via synthetic data, annotation pipelines, or new AI-labeled data sources, we think the data problem is actually an inference compute problem. Mark Zuckerberg, Dario Amodei, and Demis Hassabis seem to agree.

GPUs are hitting a wall

Santa Clara’s dirty little secret is that GPUs haven’t gotten better, they’ve gotten bigger. The compute (TFLOPS) per area of the chip has been nearly flat for four years.


With Moore’s law slowing, the only way to improve performance is to specialize.

Specialized chips are inevitable

Before transformers took over the world, many companies built flexible AI chips and GPUs to handle the hundreds of various architectures. To name a few:

- Nvidia GPUs

- Google's TPUs

- AMD's accelerators

No one has ever built an algorithm-specific AI chip (ASIC). Chip projects cost $50-100M and take years to bring to production. When we started, there was no market.

Suddenly, that’s changed:

- Unprecedented Demand: Before ChatGPT, the market for transformer inference was ~$50M, and now it’s billions. All big tech companies use transformer models (OpenAI, Google, Amazon, Microsoft, Facebook, etc.).

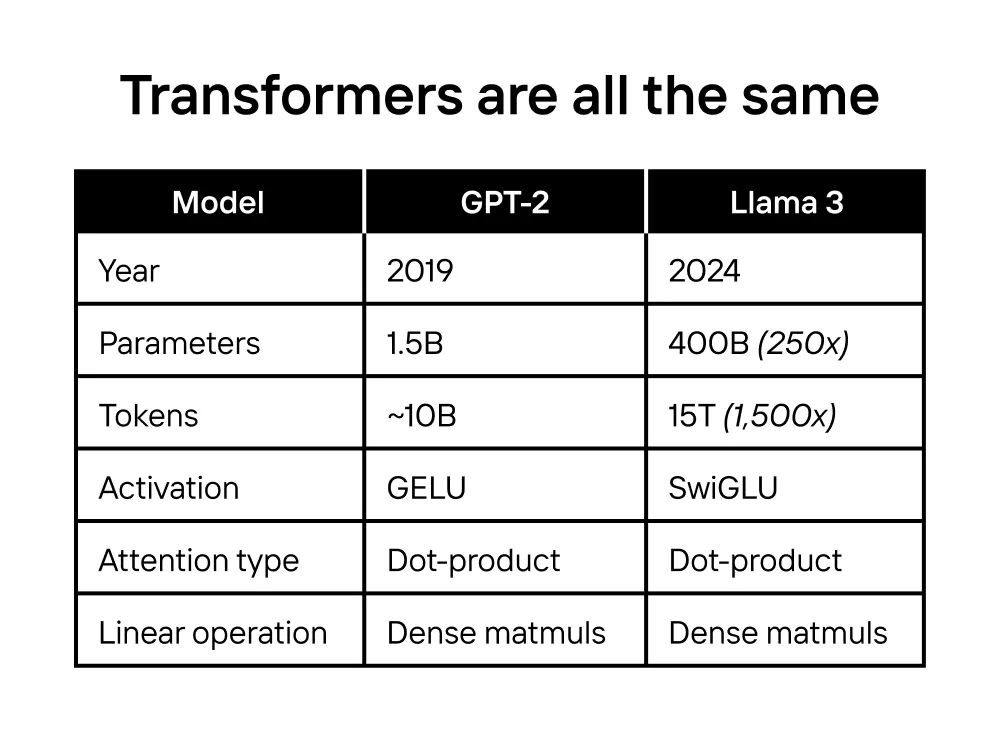

- Convergence on Architecture: AI models used to change a lot. But since GPT-2, state-of-the-art model architectures have remained nearly identical! OpenAI’s GPT-family, Google’s PaLM, Facebook’s LLaMa, and even Tesla FSD are all transformers.

When models cost $1B+ to train and $10B+ for inference, specialized chips are inevitable. At this scale, a 1% improvement would justify a $50-100M custom chip project.
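The break-even arithmetic behind that claim can be sketched in a few lines. This is only an illustration using the round figures quoted above ($1B training, $10B inference, a $50-100M chip project); the function name `breakeven_improvement` is hypothetical, and real budgets would also account for time horizons and risk.

```python
def breakeven_improvement(compute_spend_usd: float, chip_project_cost_usd: float) -> float:
    """Fraction of compute spend that must be saved to pay back a chip project."""
    return chip_project_cost_usd / compute_spend_usd


# Round figures from the text (lower bounds, treated here as exact for illustration).
total_spend = 1e9 + 10e9  # $1B training + $10B inference

for project_cost in (50e6, 100e6):
    frac = breakeven_improvement(total_spend, project_cost)
    print(f"${project_cost / 1e6:.0f}M project breaks even at a {frac:.2%} improvement")
```

Under these assumptions, even the $100M end of the range pays for itself with an improvement of just under 1%.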

In reality, ASICs are orders of magnitude faster than GPUs. When Bitcoin mining ASICs hit the market in 2014, it became cheaper to throw out GPUs than to use them to mine Bitcoin.

With billions of dollars on the line, the same will happen for AI.

Transformers are shockingly similar. Tweaks like SwiGLU activations and RoPE encodings are used everywhere: LLMs, embedding models, image inpainting, and video generation.
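For readers unfamiliar with the term, here is a minimal pure-Python sketch of a SwiGLU gate, assuming the common formulation SiLU(x·W_gate) ⊙ (x·W_up). The names `silu`, `swiglu`, `w_gate`, and `w_up` are illustrative choices, not taken from any particular model or from Sohu; production code would use a tensor library and add a down-projection.

```python
import math


def silu(z: float) -> float:
    """SiLU (Swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def swiglu(x, w_gate, w_up):
    """Elementwise SiLU(x . w_gate[j]) * (x . w_up[j]) for each hidden unit j.

    Each weight matrix is a list of columns, one column per hidden unit
    (a hypothetical layout chosen to keep the example short).
    """
    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    return [silu(dot(x, g)) * dot(x, u) for g, u in zip(w_gate, w_up)]


x = [1.0, 2.0]
w_gate = [[1.0, 0.0], [0.0, 0.0]]  # unit 0 gates on x[0]; unit 1's gate input is 0
w_up = [[0.0, 1.0], [1.0, 1.0]]
print(swiglu(x, w_gate, w_up))
```

The second output is zero because SiLU(0) = 0 closes that unit's gate. The same small block recurs across model families, and that regularity is what makes fixed-function hardware plausible.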

Although GPT-2 and Llama-3 are state-of-the-art (SoTA) models released five years apart, they have nearly identical architectures. The only major difference is scale.

Transformers have a huge moat

We believe in the hardware lottery: the models that win are the ones that can run the fastest and cheapest on hardware. Transformers are powerful, useful, and profitable enough to dominate every major AI compute market before alternatives are ready:

- Transformers power every large AI product: from agents to search to chat. AI labs have spent hundreds of millions of dollars in R&D to optimize GPUs for transformers. The current and next-generation state-of-the-art models are transformers.

- As models scale from $1B to $10B to $100B training runs in the next few years, the risk of testing new architectures skyrockets. Instead of re-testing scaling laws and performance, time is better spent building features on top of transformers, such as multi-token prediction.

- Today’s software stack is optimized for transformers. Every popular library (TensorRT-LLM, vLLM, Hugging Face TGI, etc.) has special kernels for running transformer models on GPUs. Many features built on top of transformers aren’t easily supported in alternatives (e.g., speculative decoding, tree search).

- Tomorrow’s hardware stack will be optimized for transformers. NVIDIA’s GB200s have special support for transformers (Transformer Engine). ASICs like Sohu entering the market mark the point of no return. Transformer killers will need to run on GPUs faster than transformers run on Sohu. If that happens, we’ll build an ASIC for that too!

----

"Published under our former name, Recogni. As of 09/08/2025, Recogni is now Tensordyne."